Project Proposal

1. Motivation & Objective

Extended reality (XR) has recently driven innovation in human-computer interfaces, especially in tracking the positions of the fingers on a hand. This fine-grained finger tracking is usually done with computer vision, as in the Meta Quest 3 [4]. However, vision-based finger tracking requires the hand to be in view of the camera. As a result, alternative finger pose tracking methods have been proposed, such as bioimpedance [5], wrist pressure [6], and mmWave sensing [7]. One of the most promising technologies is surface electromyography (sEMG). Meta even demonstrated it in its recent announcement of the Orion augmented reality (AR) glasses, which included an sEMG wristband to track certain gestures [3].

This project, MyoPose, will attempt to create an open-source framework for fine-grained hand pose tracking that estimates finger positions from sEMG. I will use a neural network that encodes spatial-temporal information to predict complex finger positions from sEMG signals.

2. State of the Art & Its Limitations

In 2021, researchers at Penn State published NeuroPose [1], a system that tracks a user's finger positions from a consumer sEMG wearable using a convolutional encoder-decoder architecture running on a smartphone. However, because the convolutional encoder-decoder does not encode spatial-temporal information, a 5-second window of sEMG data had to be fed to the model for each prediction. Furthermore, the consumer device they used, the Myoband [8], is no longer on the market because the company was acquired by Meta [9]. Currently, there is no developer-friendly, fully integrated sEMG alternative to the Myoband, and over the past few years research in this field has stagnated and become siloed in industry.

In addition, the authors of NeuroPose did not release their code for public use. Although a spatial-temporal model was used, it was a relatively simple RNN. Given recent advances in neural network architectures such as structured state space models [2], I expect improved performance over the baseline convolutional encoder-decoder architecture.

3. Novelty & Rationale

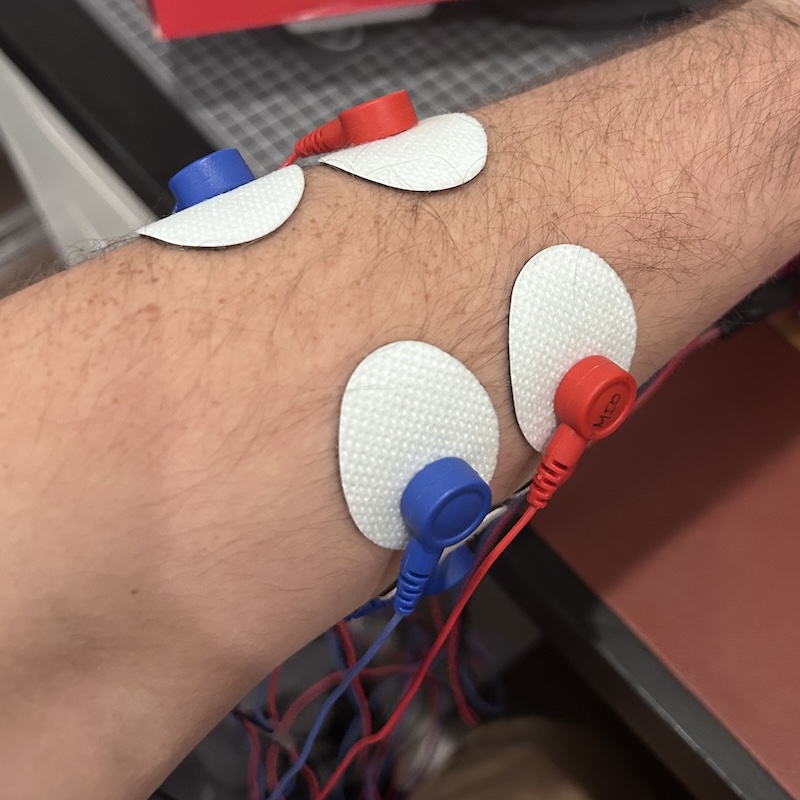

My goal is to provide an open-source framework for finger pose detection that builds on NeuroPose, adds features, and uses readily available hobby-grade sEMG hardware: the MyoWare Muscle Sensor 2.0 [10]. I plan to evaluate newer state-of-the-art (SotA) model architectures, such as Temporal Convolutional Networks (TCNs) [11] and Mamba [2], against the convolutional encoder-decoder architecture from [1]. I hope to show that, because these architectures encode spatial-temporal information in the model itself, they will not need to be fed a 5-second window for every prediction, unlike NeuroPose.
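Whether a model can avoid re-reading a 5-second window comes down to how much history it carries internally. Consider one common TCN design: a stack of causal dilated 1-D convolutions with dilations 1, 2, 4, and so on (this is an assumption here; [11] also describes encoder-decoder TCN variants). With kernel size k and dilations d_1, ..., d_L, such a stack sees 1 + (k − 1)(d_1 + ... + d_L) past samples, so the depth needed to span a window grows only logarithmically. In streaming inference, each layer only has to buffer its last (k − 1)d + 1 inputs. The Python sketch below illustrates both points. The function and class names and the 500 Hz sampling rate are hypothetical, not measured MyoWare settings.

```python
from collections import deque
from typing import List, Sequence


def receptive_field(kernel_size: int, dilations: Sequence[int]) -> int:
    """Past samples (including the current one) seen by a stack of causal
    dilated convolutions with one convolution per level."""
    return 1 + (kernel_size - 1) * sum(dilations)


def levels_needed(kernel_size: int, window: int) -> int:
    """Smallest number of levels, with dilations 1, 2, 4, ..., whose
    receptive field covers `window` samples."""
    if kernel_size < 2:
        raise ValueError("kernel_size must be at least 2 for the field to grow")
    levels = 0
    while receptive_field(kernel_size, [2 ** i for i in range(levels)]) < window:
        levels += 1
    return levels


class StreamingCausalConv:
    """A single causal dilated 1-D convolution evaluated one sample at a time."""

    def __init__(self, weights: Sequence[float], dilation: int) -> None:
        self.weights: List[float] = list(weights)
        self.dilation = dilation
        span = (len(self.weights) - 1) * dilation + 1
        # Only the most recent `span` inputs are ever read; older ones are dropped.
        self.buffer = deque([0.0] * span, maxlen=span)

    def step(self, x: float) -> float:
        self.buffer.append(x)
        # weights[j] multiplies the input from j * dilation steps ago.
        return sum(
            w * self.buffer[-1 - j * self.dilation]
            for j, w in enumerate(self.weights)
        )


if __name__ == "__main__":
    SAMPLE_RATE_HZ = 500  # hypothetical sEMG sampling rate, not a measured value
    window = 5 * SAMPLE_RATE_HZ  # samples in a NeuroPose-style 5 s window
    print(levels_needed(kernel_size=3, window=window))  # 11
    conv = StreamingCausalConv(weights=[1.0, 0.5], dilation=2)
    print([conv.step(x) for x in [1.0, 2.0, 3.0]])  # [1.0, 2.0, 3.5]
```

With the assumed 500 Hz rate and kernel size 3, eleven levels (a receptive field of 4,095 samples) are enough to cover the 2,500-sample window. Each new sEMG sample then costs one `step` call per layer instead of a full window recomputation.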

4. Potential Impact

sEMG finger pose detection is a promising idea with many applications, such as healthcare [13], advanced prosthetics [14], and XR interaction [3]. If successful, MyoPose will enable greater XR immersion and lay the groundwork for restoring amputees’ control through their prosthetics. It will also give the open-source community a framework for contributing to these fields, and more open-source development will accelerate innovation by democratizing contributions.

5. Challenges

There are many challenges with this project, including but not limited to:

Gathering Data

sEMG signals depend on many factors, including electrode placement, muscle sensor gain, and hardware variability. In addition, the ground truth relies on the Ultraleap Motion Controller depth sensor [12], which is not always accurate.

Collecting sEMG Data

It will be challenging to build a dataset that is consistent across days and electrode placements. Because electrode placement with the MyoWare Muscle Sensor 2.0 is up to the user, there can be small differences in the distances between electrodes, and these can have a very large effect on the output amplitudes.

Ground Truth Datasets

Using the Ultraleap Motion Controller as the ground truth source will inherently introduce errors into the dataset, a limitation also noted in NeuroPose [1]. However, I will still treat it as the ground truth for the model because I do not have a viable alternative.

Building and Compressing the Models

I expect issues implementing Mamba on non-standard GPUs such as a smartphone's. Mamba’s performance stems from hardware-aware optimizations that rely on the memory hierarchy of modern GPUs [2]. I am unsure whether a small model will suffer noticeably from the higher cost of inference without these optimizations, or whether the difference will be negligible.
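Mamba’s training speed comes from its hardware-aware parallel scan. At inference time, [2] describes running the model as a recurrence whose cost per new input does not grow with sequence length. The sketch below illustrates that recurrent mode with a heavily simplified, single-output selective state space layer in plain Python. It is a toy built on assumptions, not Mamba’s implementation. The class `SelectiveSSM`, the weight shapes, the Euler-style input term, and the omission of Mamba’s surrounding projections, short convolution, and gating are all simplifications chosen here for illustration.

```python
import math
from typing import Sequence


def softplus(x: float) -> float:
    # Numerically stable log(1 + e^x); keeps the step size delta positive.
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def dot(w: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(w, v))


class SelectiveSSM:
    """One output channel of a simplified diagonal selective SSM, run recurrently.

    Hypothetical toy layer: A is diagonal with negative entries, and the step
    size delta_t and the vectors B_t, C_t are linear functions of the current
    D-channel input u_t (the "selection" idea). Weights are plain floats.
    """

    def __init__(
        self,
        a: Sequence[float],
        w_delta: Sequence[float],
        w_b: Sequence[Sequence[float]],
        w_c: Sequence[Sequence[float]],
        channel: int,
    ) -> None:
        if any(a_i >= 0 for a_i in a):
            raise ValueError("diagonal entries of A must be negative")
        self.a = list(a)                    # N diagonal entries of A
        self.w_delta = list(w_delta)        # D weights -> scalar delta_t
        self.w_b = [list(r) for r in w_b]   # N x D weights -> B_t
        self.w_c = [list(r) for r in w_c]   # N x D weights -> C_t
        self.channel = channel              # input channel x_t this layer filters
        self.h = [0.0] * len(self.a)        # fixed-size hidden state

    def step(self, u: Sequence[float]) -> float:
        x = u[self.channel]
        delta = softplus(dot(self.w_delta, u))
        y = 0.0
        for i, a_i in enumerate(self.a):
            a_bar = math.exp(delta * a_i)   # zero-order-hold discretization of A
            b_i = dot(self.w_b[i], u)
            c_i = dot(self.w_c[i], u)
            # Euler-style approximation of the discretized input term B_bar * x.
            self.h[i] = a_bar * self.h[i] + delta * b_i * x
            y += c_i * self.h[i]
        return y


if __name__ == "__main__":
    # Toy layer: N = 2 state entries, D = 2 input channels, filtering channel 0.
    layer = SelectiveSSM(
        a=[-1.0, -0.5],
        w_delta=[0.1, 0.1],
        w_b=[[1.0, 0.0], [0.5, 0.5]],
        w_c=[[1.0, 0.0], [0.0, 1.0]],
        channel=0,
    )
    for t in range(1000):
        y = layer.step([math.sin(0.01 * t), math.cos(0.01 * t)])
    print(len(layer.h))  # 2: the state size does not grow with stream length
```

Each call to `step` does O(N·D) work on a state of fixed size N. On a smartphone, the open question is therefore the constant factor of this loop without GPU-specific kernels, not its asymptotic growth.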

6. Requirements for Success

The following lists the hardware and the software skills this project requires.

Hardware

- Ultraleap Motion Controller

- Currently deprecated; the alternative is the Ultraleap Motion Controller 2.0.

Software Skills

- Arduino C++ programming

- Python programming

- Proficiency with machine learning frameworks such as PyTorch [16]

- Unity [15] C# for Ultraleap ground truth tracking

7. Metrics of Success

MyoPose should achieve 90% accuracy relative to the chosen ground truth source with low latency (<100 ms) on an M2 Pro MacBook Pro. Once this is achieved for both the baseline encoder-decoder and a novel model architecture, I will measure success by the system’s robustness when the electrodes are repositioned and the arm position changes. If inference accuracy drops by less than 20%, I will consider that a success given the hardware limitations described above.
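“90% accurate” and “drops by less than 20%” can be read several ways for continuous joint angles. One possible operationalization is sketched below in Python. Accuracy is the fraction of predicted joint angles within a tolerance of the Ultraleap ground truth. The robustness criterion is a relative drop, not an absolute percentage-point drop. Latency is the median wall-clock time per prediction. The tolerance, the function names, and the choice of the median are assumptions made for illustration, not definitions from this proposal or from [1].

```python
import statistics
import time
from typing import Callable, Iterable, Sequence


def within_tolerance_accuracy(
    pred: Sequence[float], truth: Sequence[float], tol_deg: float
) -> float:
    """Fraction of predicted joint angles within `tol_deg` degrees of ground truth."""
    if len(pred) != len(truth) or not truth:
        raise ValueError("pred and truth must be non-empty and the same length")
    hits = sum(1 for p, t in zip(pred, truth) if abs(p - t) <= tol_deg)
    return hits / len(truth)


def relative_drop(baseline_acc: float, perturbed_acc: float) -> float:
    """Relative accuracy loss after repositioning electrodes or changing arm position."""
    return (baseline_acc - perturbed_acc) / baseline_acc


def median_latency_ms(predict: Callable[[object], object], inputs: Iterable[object]) -> float:
    """Median wall-clock time of one `predict` call, in milliseconds."""
    times = []
    for x in inputs:
        start = time.perf_counter()
        predict(x)
        times.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(times)


if __name__ == "__main__":
    acc = within_tolerance_accuracy(
        [10.0, 20.0, 30.0, 40.0], [11.0, 25.0, 30.0, 39.0], tol_deg=2.0
    )
    print(acc)  # 0.75
    print(relative_drop(0.9, 0.75) < 0.20)  # True
    print(median_latency_ms(lambda x: sum(x), [[1.0] * 8] * 100) < 100.0)
```

Whether the 20% threshold is relative or absolute changes the outcome noticeably near the 90% target. Fixing one reading before running the electrode-repositioning experiments would keep the comparison between architectures fair.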

If time permits, I would like to compress the model so that it can run on a smartphone, then evaluate the resulting loss of accuracy and compare power draw across models.

8. Execution Plan

The following are the main checkpoints that will be necessary to complete the project.

- Ground Truth Generation

- I will need to convert the tracked finger positions into the angle of each finger at each joint, and constrain those angles to fit the hand skeletal model of [1].

- EMG Data Collection & Streaming

- I will write an Arduino program to stream data from the sEMG sensors to the target device. The first step will be MQTT streaming to my laptop. Then, if time permits, I will write a BLE streamer for the smartphone to remove the reliance on a Wi-Fi connection.

- Convolutional Encoder-Decoder

- I will implement the convolutional encoder-decoder from [1] as a baseline for comparison with the novel models. I do not expect this to take much time, since [1] already lays out the architecture.

- Novel Model Exploration

- I will create and evaluate different architectures to determine if encoding spatial-temporal information in the model will have a positive effect on model performance. If time permits, I will further explore the viability of these models in low power domains such as smartphones.

- If Time Permits

- BLE streaming

- Model Compression

- Smartphone Implementation

- Performance and Power Measurements
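Below is a minimal sketch of the ground-truth conversion step. It assumes the tracker supplies 3D positions, in any consistent unit, for consecutive joints along each finger. The flexion angle at a joint is taken as the angle between the bone entering it and the bone leaving it, computed from their dot product. That angle is then clamped to a per-joint range. The joint names, the function names, and the limits in `JOINT_LIMITS_DEG` are hypothetical placeholders. The actual constraints would come from the hand skeletal model of [1].

```python
import math
from typing import Dict, Sequence, Tuple

# Hypothetical placeholder limits in degrees, NOT the skeletal model of [1].
JOINT_LIMITS_DEG: Dict[str, Tuple[float, float]] = {
    "MCP": (0.0, 90.0),
    "PIP": (0.0, 110.0),
    "DIP": (0.0, 80.0),
}


def flexion_deg(
    proximal: Sequence[float], joint: Sequence[float], distal: Sequence[float]
) -> float:
    """Angle between the bone entering `joint` and the bone leaving it.

    0 degrees means the two bones are collinear (finger straight)."""
    u = [j - p for p, j in zip(proximal, joint)]  # bone entering the joint
    v = [d - j for j, d in zip(joint, distal)]    # bone leaving the joint
    nu = math.sqrt(sum(c * c for c in u))
    nv = math.sqrt(sum(c * c for c in v))
    if nu == 0.0 or nv == 0.0:
        raise ValueError("joint positions must be distinct")
    cos_theta = sum(a * b for a, b in zip(u, v)) / (nu * nv)
    cos_theta = max(-1.0, min(1.0, cos_theta))  # guard against rounding outside [-1, 1]
    return math.degrees(math.acos(cos_theta))


def constrained_flexion(
    joint_name: str,
    proximal: Sequence[float],
    joint: Sequence[float],
    distal: Sequence[float],
) -> float:
    """Flexion angle clamped to the (placeholder) range for `joint_name`."""
    lo, hi = JOINT_LIMITS_DEG[joint_name]
    return max(lo, min(hi, flexion_deg(proximal, joint, distal)))


if __name__ == "__main__":
    straight = flexion_deg((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0))
    bent = constrained_flexion("DIP", (0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0))
    print(straight, bent)
```

Because `acos` returns an unsigned angle, this sketch cannot distinguish flexion from hyperextension, and it does not capture side-to-side (abduction) motion at the MCP joint. A fuller version would measure signed angles in a per-finger plane.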

9. Related Work

9.a. Papers

NeuroPose: 3D Hand Pose Tracking using EMG Wearables: [1] This is the main paper I am referencing, both for the methodology of creating the dataset with the Ultraleap Motion Controller and for the baseline neural network architecture. The main differences are that MyoPose uses different sEMG hardware and implements a neural network that encodes spatial-temporal information.

Mamba: Linear-Time Sequence Modeling with Selective State Spaces: [2] This is the original paper that proposed the Mamba architecture. MyoPose will compare this architecture to the baseline convolutional encoder-decoder from [1].

9.b. Datasets

There are no public datasets that fit this project, so I will have to create a dataset myself.

9.c. Software

10. References

[1] Y. Liu, S. Zhang, and M. Gowda, “NeuroPose: 3D Hand Pose Tracking using EMG Wearables,” in Proceedings of the Web Conference 2021, 2021, pp. 1471–1482. doi: 10.1145/3442381.3449890.

[2] A. Gu and T. Dao, “Mamba: Linear-Time Sequence Modeling with Selective State Spaces,” 2024.

[3] “Introducing Orion, our first true augmented reality glasses,” Meta.

[4] “Meta quest 3: New mixed reality VR headset,” Meta.

[5] Y. Zhang and C. Harrison, “Tomo: Wearable, Low-Cost Electrical Impedance Tomography for Hand Gesture Recognition,” in Proceedings of the 28th Annual ACM Symposium on User Interface Software & Technology, 2015, pp. 167–173. doi: 10.1145/2807442.2807480.

[6] A. Dementyev and J. A. Paradiso, “WristFlex: low-power gesture input with wrist-worn pressure sensors,” in Proceedings of the 27th Annual ACM Symposium on User Interface Software and Technology, 2014, pp. 161–166. doi: 10.1145/2642918.2647396.

[7] Y. Liu, S. Zhang, M. Gowda, and S. Nelakuditi, “Leveraging the Properties of mmWave Signals for 3D Finger Motion Tracking for Interactive IoT Applications,” Proc. ACM Meas. Anal. Comput. Syst., vol. 6, no. 3, Dec. 2022, doi: 10.1145/3570613.

[8] B. Stern, “Myo armband teardown,” Adafruit Learning System.

[9] N. Statt, “Facebook acquires neural interface startup Ctrl-Labs for its mind-reading wristband,” The Verge.

[10] “MyoWare 2.0 Muscle Sensor,” SparkFun Electronics.

[11] C. Lea, R. Vidal, A. Reiter, and G. D. Hager, “Temporal Convolutional Networks: A Unified Approach to Action Segmentation,” 2016.

[12] “Leap Motion Controller,” Ultraleap.

[13] . Song, H. Zeng, and D. Chen, “Intuitive Environmental Perception Assistance for Blind Amputees Using Spatial Audio Rendering,” IEEE Transactions on Medical Robotics and Bionics, vol. 4, no. 1, pp. 274–284, 2022, doi: 10.1109/TMRB.2022.3146743.

[14] Y. Liu, S. Zhang, and M. Gowda, “A Practical System for 3-D Hand Pose Tracking Using EMG Wearables With Applications to Prosthetics and User Interfaces,” IEEE Internet of Things Journal, vol. 10, no. 4, pp. 3407–3427, 2023, doi: 10.1109/JIOT.2022.3223600.

[15] “Unity real-time development platform,” Unity.

[16] “Pytorch Get Started,” PyTorch.